Portable VR equipment by njb design®

njb design® connects marketing and technology, 3D media and reality

- established 2008

- joint venture for digital media since 2012

- standardised processes

- modular workflows

- long-term setup

Comprehensive knowledge and many years of experience

- research in 3D media

- evaluation of applications for presentation and marketing purposes

- implementation of new developments in professional workflows

- design of assets and applications for all types of 3D media

- production of 3D animations, interactive 3D presentations and games

3D interactive

media: desktop, web, VR / AR equipment, mobile, game consoles

- virtual interaction with products

- interactive product configuration

- user controlled walkabouts

- online interaction between users

- customised presentation environment

- entirely custom UI design

- any input devices

- applications for marketing, sales, training and support

- automated software updates

- platforms: Windows, macOS, HTML5 / WebGL, Android, iOS

3D animation

media: desktop, web, TV

- animation of product functions and technical highlights

- animation of process flows

- dynamic display of components by fading

- animation of fluids and particle effects

- seamless integration of video footage

- video output by default in 4K or 8K

- basis for 3D interactive

3D visualisation / illustration

media: desktop, web, print

- consistent presentation of products and product options

- clear presentation and modular configuration of large-scale facilities

- visualisations of construction projects

- illustration of processes or process flows

- abstraction of complex processes

- sectional and exploded views

- visualisations created in parallel with product development

fluid / particle systems

Particle systems are used to simulate liquids and gases in the virtual workspace. Emitters, the actual sources of the particles, generate the desired particle clouds in open space, from surfaces or within defined volumes. Spawn rate, lifespan, speed, density and viscosity are defined for each emitter. Effects such as turbulence, gravity and wind can be applied selectively to influence the behaviour of the particles after they have been spawned. For liquids, polygonal surfaces are generated between neighbouring particles depending on their distance from each other. Increasing the particle density of the simulation produces more accurate results.
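The interplay of spawn rate, lifespan, gravity, wind and turbulence can be illustrated with a minimal sketch. It assumes a single point emitter at the origin, constant forces and simple explicit Euler integration. All names are hypothetical, and density, viscosity and the generation of liquid surfaces are deliberately not modelled.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Particle:
    position: list  # [x, y, z] in scene units
    velocity: list  # [vx, vy, vz] in scene units per second
    age: float = 0.0


@dataclass
class Emitter:
    spawn_rate: float               # particles spawned per second
    lifespan: float                 # seconds until a particle is removed
    speed: float                    # initial speed along +y
    gravity: float = -9.81          # acceleration along y
    wind: tuple = (0.0, 0.0, 0.0)   # constant acceleration from wind
    turbulence: float = 0.0         # amplitude of random acceleration
    seed: int = 0
    particles: list = field(default_factory=list)
    _pending: float = 0.0           # fractional particles carried to the next step

    def __post_init__(self):
        self._rng = random.Random(self.seed)

    def step(self, dt):
        # Spawn: accumulate fractional particles so any rate works with any dt.
        self._pending += self.spawn_rate * dt
        while self._pending >= 1.0:
            self._pending -= 1.0
            self.particles.append(Particle([0.0, 0.0, 0.0], [0.0, self.speed, 0.0]))
        # Update: apply gravity, wind and turbulence, then integrate (explicit Euler).
        for p in self.particles:
            jitter = [self._rng.uniform(-1.0, 1.0) * self.turbulence for _ in range(3)]
            accel = [self.wind[0] + jitter[0],
                     self.wind[1] + self.gravity + jitter[1],
                     self.wind[2] + jitter[2]]
            for i in range(3):
                p.velocity[i] += accel[i] * dt
                p.position[i] += p.velocity[i] * dt
            p.age += dt
        # Remove particles that have exceeded their lifespan.
        self.particles = [p for p in self.particles if p.age < self.lifespan]
```

With `Emitter(spawn_rate=10, lifespan=0.25, speed=2.0)` stepped ten times at `dt=0.1`, only the two youngest particles remain alive, because spawn rate and lifespan together bound the size of the particle cloud.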

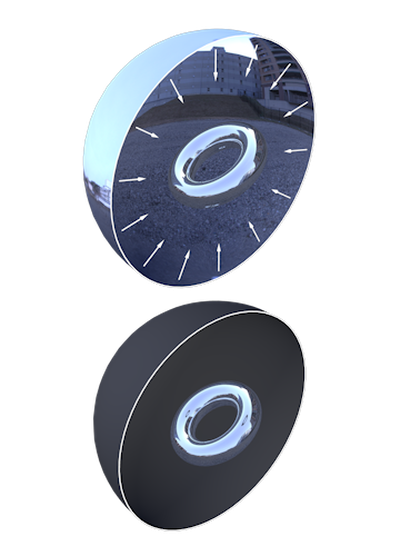

environment capture

Panoramic captures or individual photos of a location can be used as the environment in the virtual workspace. In most cases, panoramic captures are created from distortion-free individual HDR images that are combined by stitching and prepared for spherical projection. In the virtual workspace, the panoramic captures are projected onto a sphere that surrounds the scene. The contents of individual photos can be positioned flexibly in a scene to reproduce adjacent areas.
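The spherical projection of a stitched panorama can be sketched as a mapping from a viewing direction to a position in an equirectangular image (2:1 aspect ratio). The axis convention (y up, looking along -z at the image centre) and the function names are assumptions for illustration. Actual engines may orient the sphere differently.

```python
import math


def direction_to_equirect_uv(x, y, z):
    """Map a view direction (y up, -z forward) to (u, v) in [0, 1] on an
    equirectangular panorama; (0.5, 0.5) is the image centre."""
    length = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / length, y / length, z / length
    longitude = math.atan2(x, -z)                  # -pi..pi, 0 when looking along -z
    latitude = math.asin(max(-1.0, min(1.0, y)))   # -pi/2 (down) .. pi/2 (up)
    u = 0.5 + longitude / (2.0 * math.pi)
    v = 0.5 - latitude / math.pi                   # v = 0 at the top row of the image
    return u, v


def uv_to_pixel(u, v, width, height):
    """Convert (u, v) to integer pixel coordinates, wrapping horizontally."""
    px = int(u * width) % width
    py = min(int(v * height), height - 1)
    return px, py
```

For a 4096 x 2048 panorama, the forward direction `(0, 0, -1)` lands on pixel `(2048, 1024)`, and straight up lands on the top row.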

3D CAD

3D models created for the technical design and construction of a product can be used directly for visualizations and animations. For interactive productions, the models serve as a basis and are optimized accordingly. For larger assemblies, it is useful to remove invisible parts, or parts that are not involved in the process, before export. The optimal 3D format for the export depends on the CAD system in use.

objects

Depending on their shape, objects can be transferred into the 3D workspace either by manual measurement and subsequent modelling or by a scanning process. Technical shapes are more likely to be measured manually, whereas scanners make sense for natural and organic shapes.

2D CAD

Blueprints made with 2D CAD systems are ideal for the creation of 3D models. All geometries and dimensions can be transferred directly into the 3D space.

sketches / photos

Sketches are a common way to visualise ideas and concepts. Together with photos of similar objects or shapes, they provide a good basis for the creation of 3D models. During modelling, all inputs can be taken into account. Based on interim results in 3D, the final shapes are determined step by step. The shapes can be developed further at any time to represent the initial ideas as accurately as possible.

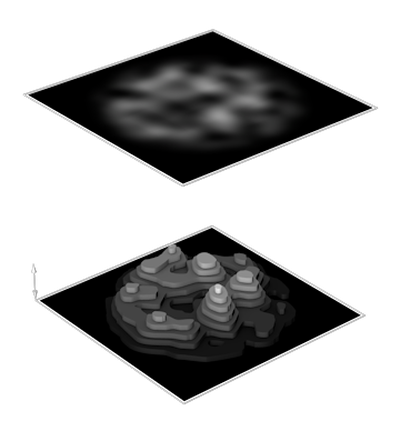

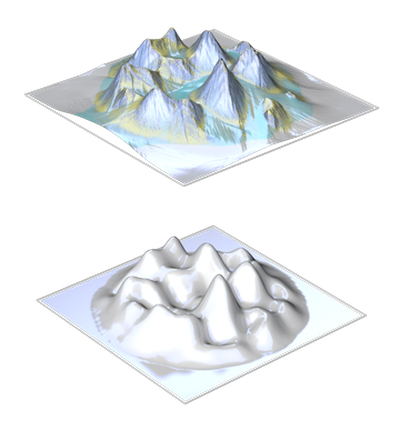

grayscale heightmaps

A heightmap is a grayscale relief that represents landscapes or surfaces. Dark shades indicate low areas and light shades indicate high areas. The height accuracy depends on the bit depth of the image: a grayscale image with 8 bits per pixel contains 256 shades (0 to 255), and one with 16 bits contains 65'536 shades (0 to 65'535). The resolution determines the horizontal accuracy: a pixel is the smallest representable length and corresponds to a certain distance in the real world. Heightmaps based on data from satellite surveying and aerial photography are available for the entire surface of the Earth.
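The effect of bit depth on height accuracy can be shown with a short calculation. A heightmap itself only stores grey values, so the minimum and maximum terrain heights and the metres-per-pixel value below are assumptions that would normally come from the data source.

```python
def height_step(bit_depth, min_height, max_height):
    """Smallest representable height difference for a given bit depth."""
    return (max_height - min_height) / (2 ** bit_depth - 1)


def gray_to_elevation(value, bit_depth, min_height, max_height):
    """Convert a grayscale value (0 = darkest) to an elevation in metres."""
    max_value = 2 ** bit_depth - 1  # 255 for 8-bit, 65535 for 16-bit
    if not 0 <= value <= max_value:
        raise ValueError(f"value must be between 0 and {max_value}")
    return min_height + value / max_value * (max_height - min_height)


def ground_extent(pixels, metres_per_pixel):
    """Real-world length covered by a row or column of the heightmap."""
    return pixels * metres_per_pixel
```

For a terrain spanning 0 to 2550 m, an 8-bit heightmap can only resolve steps of 10 m, while 16 bits bring the step below 4 cm. At a hypothetical 30 m per pixel, a 4097-pixel row covers about 123 km.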

3D scan

In addition to classic modelling, various scanning methods are available to transfer real-world objects into the 3D workspace. 3D scans are ideally suited for the transfer of natural and organic shapes. With laser scanning, points are measured along a virtual grid on the surface. The result is a huge number of measuring points (point cloud), from which a polygonal 3D model can be generated. A simple alternative to laser measurement is photogrammetry. The objects are photographed from different angles using a specific lighting setup. The 3D model is then calculated from matching pixels across the different pictures. The recorded photo data can additionally be used to materialize the 3D models.

modelling

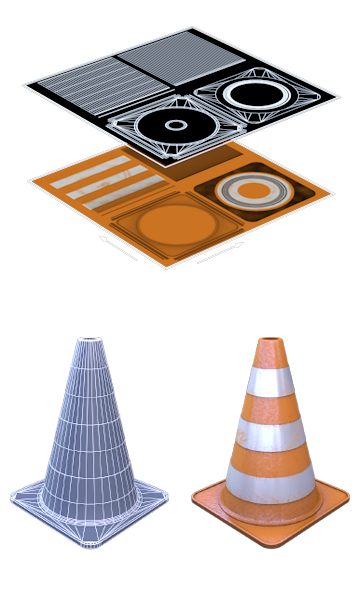

The 3D models are created based on the available data. The modelling process involves a variety of tools, comparable to manufacturing methods in reality. Each face of a 3D model is defined by three points and is called a polygon; a rectangular area therefore consists of two polygons. The more accurately a shape is to be represented, the more polygons / subdivisions are required. Creating shapes based on rectangles, circles or splines is largely automated. For freeform surfaces, each point of a model can be defined individually. This is usually done with few subdivisions and a small number of polygons. Afterwards, the model can be refined to the desired quality, with the surfaces subdivided further automatically during this step. In addition, deformers are available that deform models in certain areas (bend, compress, expand, etc.).
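The relationship between rectangles, triangles and subdivision can be sketched on an indexed mesh. The sketch below uses plain midpoint subdivision, which splits each triangle into four without smoothing. It is a simplified stand-in for the smoothing subdivision schemes used in modelling software. The function names are illustrative.

```python
def quad_to_triangles(quad):
    """Split a quad (four vertex indices, counter-clockwise) into two triangles."""
    a, b, c, d = quad
    return [(a, b, c), (a, c, d)]


def subdivide(vertices, triangles):
    """Split every triangle into four by inserting edge midpoints.

    Plain midpoint subdivision: it adds polygons but does not smooth the
    shape (smoothing schemes such as Loop or Catmull-Clark also move vertices).
    """
    vertices = list(vertices)
    midpoints = {}  # shared edges get one midpoint, keeping the mesh connected

    def midpoint(i, j):
        key = (min(i, j), max(i, j))
        if key not in midpoints:
            vertices.append(tuple((p + q) / 2.0 for p, q in zip(vertices[i], vertices[j])))
            midpoints[key] = len(vertices) - 1
        return midpoints[key]

    new_triangles = []
    for a, b, c in triangles:
        ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
        new_triangles += [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]
    return vertices, new_triangles
```

A square becomes 2 triangles, then 8 after one subdivision and 32 after two. The fourfold growth per step shows why the subdivision level is chosen per use case.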

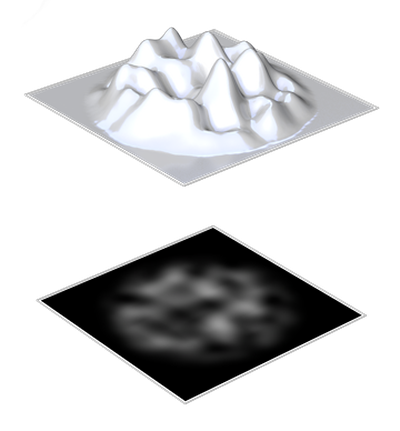

heightmap generation

To create heightmaps, existing elevation data is converted to grayscale, or completely random landscapes are generated. Programs that display the grey values of the heightmaps directly as a 3D model simplify development. With various noise functions and manual adjustments, realistic landscapes can be generated.
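A random landscape can be sketched by summing several octaves of value noise (fractal Brownian motion). This is one common noise approach, assumed here for illustration. Dedicated terrain tools offer more elaborate functions. The seed makes the result reproducible, and the output is quantised to 16-bit grey values.

```python
import random


def value_noise(size, cells, seed):
    """One octave of value noise: random values on a (cells+1)^2 lattice,
    smoothly interpolated to a size x size grid; values lie in [0, 1]."""
    rng = random.Random(seed)
    lattice = [[rng.random() for _ in range(cells + 1)] for _ in range(cells + 1)]

    def smooth(t):
        return t * t * (3.0 - 2.0 * t)   # smoothstep avoids visible grid creases

    grid = []
    for y in range(size):
        gy = y / size * cells
        y0 = int(gy)
        ty = smooth(gy - y0)
        row = []
        for x in range(size):
            gx = x / size * cells
            x0 = int(gx)
            tx = smooth(gx - x0)
            top = lattice[y0][x0] * (1 - tx) + lattice[y0][x0 + 1] * tx
            bottom = lattice[y0 + 1][x0] * (1 - tx) + lattice[y0 + 1][x0 + 1] * tx
            row.append(top * (1 - ty) + bottom * ty)
        grid.append(row)
    return grid


def fractal_heightmap(size, octaves=4, base_cells=4, persistence=0.5, seed=0):
    """Sum octaves of value noise: each octave doubles the detail and scales
    the amplitude by `persistence`; the result is normalised to [0, 1]."""
    heights = [[0.0] * size for _ in range(size)]
    amplitude, total = 1.0, 0.0
    for octave in range(octaves):
        layer = value_noise(size, base_cells * 2 ** octave, seed + octave)
        for y in range(size):
            for x in range(size):
                heights[y][x] += amplitude * layer[y][x]
        total += amplitude
        amplitude *= persistence
    return [[h / total for h in row] for row in heights]


def to_gray16(heights):
    """Quantise normalised heights to 16-bit grayscale values (0..65535)."""
    return [[round(h * 65535) for h in row] for row in heights]
```

Lower `persistence` values give smoother, rolling terrain, and higher values give rougher, more detailed terrain. The same seed always produces the same landscape.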

referencing / grouping

3D assemblies created for the technical design and construction of a product are usually grouped by installation or function. For 3D animations and 3D interactive, it is necessary to check whether all movements can be performed with the existing structure. If necessary, further movement groups are created. In a second step, the individual parts of each assembly are sorted by material. Existing material assignments are reused whenever possible. Assemblies that occur multiple times are optimised once and referenced as instances at the different positions.
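Referencing and material grouping can be sketched as a small data model. Each distinct assembly is stored once, and every placement only holds a reference plus its own position and material. The part names and triangle counts below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mesh:
    name: str
    triangle_count: int


@dataclass
class Instance:
    mesh: Mesh        # shared reference, not a copy of the geometry
    position: tuple
    material: str


def build_scene(placements):
    """placements: (mesh name, triangle count, position, material) tuples."""
    meshes = {}
    instances = []
    for mesh_name, triangle_count, position, material in placements:
        mesh = meshes.setdefault(mesh_name, Mesh(mesh_name, triangle_count))
        instances.append(Instance(mesh, position, material))
    return meshes, instances


def group_by_material(instances):
    """Sort placed parts by material so each material is assigned once."""
    groups = {}
    for inst in instances:
        groups.setdefault(inst.material, []).append(inst)
    return groups
```

Two placed bolts share one mesh object, so optimising that mesh once improves every position where it is used.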

motion capture

Capturing movements in reality and playing them back in the virtual workspace produces extremely natural results. While the movements are performed, the positions of various reference points on the objects are captured continuously. The captured movements can then be transferred directly to a skeleton in the virtual workspace that corresponds to the real structure.

storyboard

Before a project starts, the storyboard captures all specifications. Based on the key message, the camera settings, perspectives, movements, materialization, lighting, duration, audio and environment are derived for each scene. For 3D interactive, the storyboard also contains the definition of all interactions and the specification of the movement concept.

rigging

For animations that affect the shape of a 3D model at the polygon level, the individual points of the 3D model are assigned to a skeleton. The combination of skeleton and 3D model is called a character. As in reality, a skeleton represents the supporting structure of an object and consists of bones and joints; the surface of the 3D model can be compared to skin. Instead of laboriously animating individual model points, the bones and joints are animated, and the surface follows the assigned bones automatically. Individual model points may be influenced by multiple bones. For example, a surface point near a joint may follow the bone before the joint by 40% and the bone after the joint by 60%. All assignments are stored in a weighting table called a vertex map. For every joint, constraints can be defined that restrict its range of motion or create dependencies on other joints. This also allows the creation of inverse kinematics systems that calculate bone chains in the opposite direction, for example to automatically calculate the movement of all bones back to the shoulder while the hand is moved.
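The 40% / 60% example corresponds to linear blend skinning. In this technique, a point is moved by every bone that influences it, and the results are averaged by weight. The sketch below is simplified to 2D, with each bone rotating about its own joint in world space. Production rigs use full 3D bone hierarchies. The bone names, vertex index and pose are hypothetical.

```python
import math


def rotate_about(point, pivot, angle_deg):
    """Rotate a 2D point around a pivot (the joint) by angle_deg."""
    a = math.radians(angle_deg)
    dx, dy = point[0] - pivot[0], point[1] - pivot[1]
    return (pivot[0] + dx * math.cos(a) - dy * math.sin(a),
            pivot[1] + dx * math.sin(a) + dy * math.cos(a))


def skin_vertex(rest_position, influences):
    """Linear blend skinning: influences is a list of
    (weight, joint_pivot, joint_angle_deg) tuples, one per bone."""
    total = sum(weight for weight, _, _ in influences)
    x = y = 0.0
    for weight, pivot, angle in influences:
        px, py = rotate_about(rest_position, pivot, angle)
        x += weight / total * px
        y += weight / total * py
    return x, y


def skin_from_map(index, rest_position, vertex_map, pose):
    """Look up a vertex's bone weights in the vertex map and apply the pose."""
    influences = [(weight, *pose[bone]) for bone, weight in vertex_map[index]]
    return skin_vertex(rest_position, influences)


# Hypothetical vertex map: vertex index -> list of (bone name, weight).
vertex_map = {7: [("upper_arm", 0.4), ("forearm", 0.6)]}
# Hypothetical pose: bone name -> (joint pivot, rotation in degrees).
pose = {"upper_arm": ((0.0, 0.0), 0.0), "forearm": ((1.0, 0.0), 90.0)}
```

Bending the forearm by 90° moves vertex 7 from `(1.2, 0.0)` to roughly `(1.08, 0.12)`, a blend between staying with the upper arm and following the forearm completely.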

production data

To produce objects by 3D printing or machining, the 3D models are optimized so that manufacturing data can be provided at any scale. Optimizing may involve merging individual 3D parts, reducing details or improving edges. The manufacturing data includes 3D models as well as technical blueprints or 2D outlines for laser processing and engraving.

animation / simulation

During animation, all parameters are recorded along the time axis. With classic keyframe animation, the position, alignment, scaling or other parameters of the respective objects are defined key by key. Different interpolation methods are available to create the desired animation curves. They allow the acceleration before and after a key to be adjusted, and therefore the creation of hard and soft transitions. Classic animation is supplemented by the definition of conditions (constraints), e.g. the alignment of objects to other objects or the positioning of objects along paths or on surfaces. If the activation of animation sequences depends on user inputs or other events, the required dependencies can be programmed accordingly. The animation sequences can then be played back at any time and are no longer bound to a fixed position on the time axis. For the physical simulation of forces such as gravity in real time, classic animation is not required: the position of the objects is calculated automatically based on predefined simulation values such as weight, friction, etc.
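Hard and soft transitions between keys can be illustrated with two interpolation methods. Linear interpolation keeps a constant speed and changes abruptly at each key. Smoothstep easing starts and ends each segment with zero speed. The key format and function names are assumptions for this sketch. Animation software usually offers editable Bézier or Hermite curves instead.

```python
def smoothstep(t):
    """Ease-in / ease-out curve: zero slope at t = 0 and t = 1."""
    return t * t * (3.0 - 2.0 * t)


def evaluate(keys, time, ease=False):
    """Value of an animated parameter at `time`.

    keys: list of (time, value) pairs sorted by time; outside the key
    range the first or last value is held.
    """
    if time <= keys[0][0]:
        return keys[0][1]
    if time >= keys[-1][0]:
        return keys[-1][1]
    for (t0, v0), (t1, v1) in zip(keys, keys[1:]):
        if t0 <= time <= t1:
            t = (time - t0) / (t1 - t0)      # progress within this segment
            if ease:
                t = smoothstep(t)            # soft transition at both keys
            return v0 + (v1 - v0) * t
```

With keys `[(0.0, 0.0), (2.0, 10.0)]`, both methods reach 5.0 halfway, but at 0.5 s the eased curve is only at 1.5625 instead of 2.5 because it accelerates gently out of the first key.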

corporate standards

Graphical specifications given by the corporate design are taken into account throughout the process. In addition, njb design® supports its customers in defining and implementing standards for 3D media. Colours and logos can always be kept up to date. New content and updates are implemented so that they remain compatible with existing media assets.

environment

The environment of a scene has a significant impact on the appearance of a visualization. Environments can affect material properties such as reflections, serve as skylights for the global illumination of a scene, or be used as background elements that don’t affect the content of a scene. Spheres that surround the entire scene are used to display panoramic HDR images or parametric sky shaders that generate environments based on date, time and weather. Apart from the global environment, any 3D object can be used as an environment asset in a scene to specifically affect material properties or lighting.
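A key input for a parametric sky based on date and time is the height of the sun above the horizon. The sketch below uses a common textbook approximation for the solar declination and the hour angle. It ignores atmospheric refraction and the difference between clock time and solar time. How a particular sky shader uses this value is not covered here.

```python
import math


def solar_elevation_deg(latitude_deg, day_of_year, solar_hour):
    """Approximate sun elevation above the horizon in degrees.

    day_of_year: 1..365; solar_hour: local solar time (12.0 = solar noon).
    The cosine approximation of the declination is a rough estimate that
    ignores refraction and the equation of time.
    """
    declination = -23.44 * math.cos(math.radians(360.0 / 365.0 * (day_of_year + 10)))
    hour_angle = 15.0 * (solar_hour - 12.0)      # the sun moves 15 degrees per hour
    lat, dec, ha = (math.radians(v) for v in (latitude_deg, declination, hour_angle))
    sin_elevation = (math.sin(lat) * math.sin(dec)
                     + math.cos(lat) * math.cos(dec) * math.cos(ha))
    return math.degrees(math.asin(sin_elevation))
```

At 47° northern latitude around the summer solstice (day 172), the sun reaches about 66° at solar noon. Negative values mean the sun is below the horizon, where a sky shader would switch to twilight or night.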

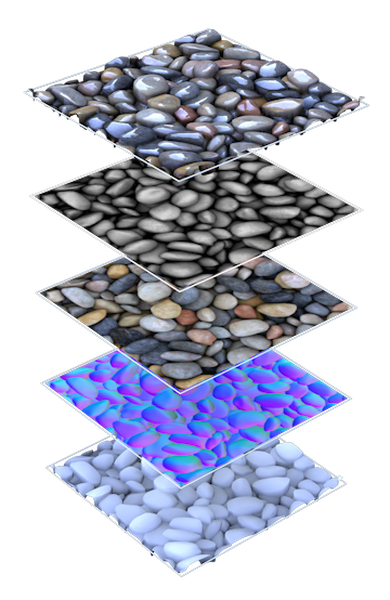

surface scan / photo

Surfaces are mostly captured photographically to create virtual materials. Uniform illumination of the surfaces, which minimizes shading and reflections, is crucial during the capture. In addition to the colour values, surfaces can also be captured in 3D using photogrammetry or other scanning methods. The resulting 3D model can be used directly or serve as the basis for bump and normal maps.
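Deriving a normal map from captured height data can be sketched with central differences: the slope in x and y tilts the surface normal, which is then stored as RGB. The wrap-around at the edges assumes a tileable surface, and the green-channel direction is an assumption, since tools differ in their normal map conventions.

```python
import math


def height_to_normals(height, strength=1.0):
    """Per-pixel unit normals from a 2D grid of height values."""
    rows, cols = len(height), len(height[0])
    normals = []
    for y in range(rows):
        row = []
        for x in range(cols):
            # Central differences with wrap-around (assumes a tileable surface).
            dx = height[y][(x + 1) % cols] - height[y][(x - 1) % cols]
            dy = height[(y + 1) % rows][x] - height[(y - 1) % rows][x]
            # Normal of the surface z = h(x, y), scaled by `strength`.
            nx, ny, nz = -dx * strength, -dy * strength, 2.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            row.append((nx / length, ny / length, nz / length))
        normals.append(row)
    return normals


def normal_to_rgb(n):
    """Encode a unit normal (-1..1 per axis) as 8-bit RGB (0..255)."""
    return tuple(round((c * 0.5 + 0.5) * 255) for c in n)
```

A flat surface produces the typical light blue `(128, 128, 255)` of normal maps, and slopes shift the red and green channels.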

parametric shader

Unlike materials based on photographic captures, parametric shaders are calculated for every position on a surface from mathematical functions (procedural) and are therefore independent of a fixed pixel resolution. The calculation uses noise functions such as Perlin, Simplex or Voronoi, which generate the desired patterns from a start value (seed). Global parameters allow the scale or the accuracy of the calculation to be adjusted.
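Resolution independence can be illustrated with a cellular (Voronoi / Worley) noise function evaluated at continuous coordinates. Each grid cell gets one pseudo-random feature point derived from the seed, and the pattern value is the distance to the nearest point. The `scale` and `seed` parameters mirror the global parameters mentioned above. The implementation is a simplified sketch, not a particular renderer's shader.

```python
import math
import random


def worley(x, y, scale=4.0, seed=0):
    """F1 cellular (Voronoi / Worley) noise: distance from (x, y) to the
    nearest feature point, with one pseudo-random feature point per cell."""
    px, py = x * scale, y * scale
    cx, cy = math.floor(px), math.floor(py)
    nearest = float("inf")
    # A 5 x 5 neighbourhood guarantees the true nearest point is found.
    for oy in range(-2, 3):
        for ox in range(-2, 3):
            gx, gy = cx + ox, cy + oy
            cell_rng = random.Random(f"{seed}:{gx}:{gy}")  # same cell -> same point
            fx, fy = gx + cell_rng.random(), gy + cell_rng.random()
            nearest = min(nearest, math.hypot(px - fx, py - fy))
    return nearest


def render(resolution, scale=4.0, seed=0):
    """Sample the pattern at pixel centres over the unit square."""
    return [[worley((i + 0.5) / resolution, (j + 0.5) / resolution, scale, seed)
             for i in range(resolution)] for j in range(resolution)]
```

Because the function is evaluated at coordinates rather than read from pixels, a 2 x 2 and a 6 x 6 rendering agree exactly wherever their sample positions coincide. Changing the seed produces a new variation of the same pattern.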

texture processing

A texture is usually a square image whose opposite edges match. These seamless textures, which can be projected onto 3D models as endless patterns, are generated by combining different photos of a surface. If 3D data has been captured together with the colour values, it can be used to create bump and normal maps, which then also become part of the seamless texture. To reduce the number of textures and the memory requirements for 3D interactive, different surface types can be combined in one texture file. These textures are not seamless and are created specifically for the UV map of a 3D model.

materializing

A material (shader) consists of different channels that define the visual properties of a surface. The channels include colour (diffuse), specularity, reflection, transparency and refraction, various deformation methods, masking and emission. Each channel can be adjusted individually for every position on a surface. Deformations can be simulated by shading the surfaces accordingly (relief / normal) or by means of parallax. In addition, the polygons of a 3D model can be deformed directly based on a bump map using displacement. Materials can be assigned to entire assemblies, individual parts or polygon surfaces. For 3D models with a UV map, it’s possible to paint directly on the model surface in all available channels. Shadows and other lighting effects can be pre-rendered and projected onto the model surfaces using lightmaps (baking). For the simulation of physics, values for the density, friction and elasticity of a surface can be defined in addition to its visual properties.
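How several channels combine into one colour can be sketched for a single light with the classic Lambert (diffuse) and Blinn-Phong (specular) models plus an emission term. These textbook models are assumed here for illustration. Current renderers typically use more elaborate physically based shaders. Colours are linear RGB values and are not clamped.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def shade(normal, to_light, to_viewer, diffuse_colour,
          specular=0.5, glossiness=32.0, emission=(0.0, 0.0, 0.0)):
    """Combine the diffuse, specular and emission channels for one light."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_viewer)
    diffuse = max(dot(n, l), 0.0)                             # Lambert term
    spec = 0.0
    if diffuse > 0.0 and dot(n, v) > 0.0:                     # light and viewer in front
        h = normalize(tuple(a + b for a, b in zip(l, v)))     # half vector
        spec = specular * max(dot(n, h), 0.0) ** glossiness   # Blinn-Phong highlight
    return tuple(e + c * diffuse + spec for c, e in zip(diffuse_colour, emission))
```

Higher `glossiness` values give smaller, sharper highlights. A surface facing away from the light only keeps its emission.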

lighting

The illumination of a scene depends on the central theme and the viewing direction. A classic setup includes a master (key) light for the basic illumination, fill lights to extend the basic illumination, and effect lights to highlight edges or specific areas. Intensity, colour, shape and shadow can be adjusted individually for each light source. The available types of light sources include point lights, which emit light in all directions, spotlights, which form cones of light, and area lights, which emit light based on the shape of objects. With IES lighting profiles, it’s also possible to reproduce the light distribution of real light sources. Light can be made visible in the virtual workspace using volumetric lights. In addition to individual light sources, global skylights can be used (see environment).
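The difference between a point light and a spotlight can be sketched as follows. Both lose intensity with the square of the distance, and a spotlight additionally multiplies by a cone factor with a soft edge. The linear edge falloff and the parameter names are assumptions. Renderers offer various falloff curves, and IES profiles replace the cone with measured data.

```python
import math


def spotlight_irradiance(light_pos, light_dir, point, intensity,
                         cone_deg=30.0, falloff_deg=5.0):
    """Light arriving at `point` from a spotlight.

    cone_deg is the half-angle of the light cone; over its last falloff_deg
    degrees the light fades linearly to zero (soft cone edge).
    """
    offset = [p - l for p, l in zip(point, light_pos)]
    distance = math.sqrt(sum(c * c for c in offset))
    direction_len = math.sqrt(sum(c * c for c in light_dir))
    cos_angle = sum(o * d for o, d in zip(offset, light_dir)) / (distance * direction_len)
    angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))
    if angle >= cone_deg:
        cone = 0.0
    elif angle <= cone_deg - falloff_deg:
        cone = 1.0
    else:
        cone = (cone_deg - angle) / falloff_deg
    # Inverse-square falloff; a point light is the same without the cone factor.
    return intensity * cone / (distance * distance)
```

A point 2 units in front of a spotlight with intensity 100 receives 25, at 4 units only 6.25. A point 45° off-axis lies outside a 30° cone and stays dark.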

rendering

Rendering calculates the final image output from the 3D models, materials and lighting properties. Starting from all light sources, the lighting of the entire scene is derived using ray tracing. With global illumination, light rays can be traced over several bounces after hitting surfaces. Depending on the surface properties and the angle of incidence, a certain amount of light is passed on. Various effects allow the results to be adjusted specifically. Ambient occlusion, for example, generates shading automatically depending on the angle and the distance between surfaces. In addition, methods such as antialiasing and motion blur are used to optimize the transfer of the results into pixel space and the playback of individual frames as animations. The duration of the image calculation depends on the final pixel resolution, the accuracy and depth of the light calculation, the complexity of the models and materials, and the effects in use. The calculation effort can be optimized for every object in a scene, for example depending on its distance to the camera.
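The basic operation of ray tracing is the intersection test between a ray and the scene geometry. The sketch shows it for spheres, together with a shadow ray that checks whether anything blocks the path from a surface point to a light. Real renderers test triangles and use acceleration structures. The functions here are illustrative only.

```python
import math


def ray_sphere(origin, direction, center, radius, epsilon=1e-9):
    """Distance t along the ray (origin + t * direction) to the nearest
    intersection in front of the origin, or None if the sphere is missed."""
    oc = [o - c for o, c in zip(origin, center)]
    a = sum(d * d for d in direction)
    b = 2.0 * sum(o * d for o, d in zip(oc, direction))
    c = sum(o * o for o in oc) - radius * radius
    discriminant = b * b - 4.0 * a * c
    if discriminant < 0.0:
        return None                      # the ray misses the sphere
    root = math.sqrt(discriminant)
    for t in ((-b - root) / (2.0 * a), (-b + root) / (2.0 * a)):
        if t > epsilon:                  # ignore hits behind or at the origin
            return t
    return None


def in_shadow(point, light_pos, spheres):
    """Shadow ray: is any sphere between the point and the light?"""
    direction = [l - p for l, p in zip(light_pos, point)]  # t = 1 reaches the light
    for center, radius in spheres:
        t = ray_sphere(point, direction, center, radius)
        if t is not None and t < 1.0:
            return True
    return False
```

A ray from the origin along +z hits a unit sphere centred at z = 5 after a distance of 4. Global illumination repeats such tests for every bounce, which is one reason why the light calculation depth drives render times.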

compiling

Compiling converts all functions and contents of a project into executable programs, depending on the engine or programming language used. Applications can be generated for different platforms such as Windows, macOS, Android and iOS. Interactive online applications can be output as HTML5 / WebGL projects.

graphic processing

In graphic processing, the renderings are prepared for their final use and exported in the desired image formats. Renderings with 32-bit floating-point colour depth per channel (HDR) allow contrast, brightness and colour values to be optimized specifically during conversion to 8-bit formats for print. Light and effect channels allow the intensity of individual light sources and effects to be adjusted afterwards. Individual objects of a scene can be kept on separate layers and displayed with a transparent background by masking. When combining 3D objects with photos, the shadows cast by the objects can be integrated into the photo environment.
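Converting HDR values, which can exceed 1.0, into 8-bit output requires tone mapping. A minimal sketch assumes the simple Reinhard operator and a plain 2.2 gamma curve as an approximation of sRGB, applied per channel. In practice, the curve is usually adjusted interactively in image editing software.

```python
def hdr_to_8bit(value, exposure=1.0, gamma=2.2):
    """Map one linear HDR channel value (0..infinity) to an 8-bit value."""
    v = max(value, 0.0) * exposure
    mapped = v / (1.0 + v)             # Reinhard: compresses [0, inf) into [0, 1)
    encoded = mapped ** (1.0 / gamma)  # gamma encoding for display / print
    return round(encoded * 255)
```

Very bright highlights approach 255 without hard clipping, and the `exposure` factor brightens or darkens the whole image before compression.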

audio / video

Audio and video data can be used to complement 3D animations and 3D interactive. Background music supports the flow of an animation and can provide the timing for movements or video editing. Voice recordings are used for auditory information or in dialogues. For 3D interactive, the playback of video and audio sequences can depend on user actions, inputs or certain values. Video data can be seamlessly combined with and enhanced by 3D content.

video editing

During video editing, the final composition of video, image and audio content is created. The individual scenes are prepared and combined optimally by means of video cutting. Vector graphics such as logos and text overlays are applied according to the graphical specifications. With motion tracking, elements can be aligned continuously to the image content. For transitions between scenes and different media, a variety of effects and types of fading are available. Video and audio content are matched during synchronization.

encoding

Based on the final composition, the desired video formats are exported. The video format, compression, resolution and frame rate are determined by the final use. The output can be produced with different profiles up to 8K (7680x4320 pixels) at 60 fps. A common video format is MP4 with H.264 compression.
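The need for compression becomes clear from the uncompressed data rate. The sketch assumes 8 bits per colour channel (24 bits per pixel). The target bitrate in the example is a hypothetical value used only to show the resulting compression ratio.

```python
def uncompressed_bytes_per_second(width, height, fps, bits_per_pixel=24):
    """Raw video data rate without any compression."""
    return width * height * bits_per_pixel // 8 * fps


def compression_ratio(width, height, fps, bitrate_bits_per_second, bits_per_pixel=24):
    """How strongly an encoder must compress to reach a given bitrate."""
    raw_bits = width * height * bits_per_pixel * fps
    return raw_bits / bitrate_bits_per_second
```

Uncompressed 8K at 60 fps amounts to 5,971,968,000 bytes (about 6 GB) per second. For 4K at 30 fps and a hypothetical target of 100 Mbit/s, the encoder has to reduce the data by a factor of about 60.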

3D visualisation / illustration

High resolution images for desktop, web and print.

3D animation

Video presentations for web, desktop and TV.

3D interactive

Interactive applications for desktop, web, VR / AR equipment, mobile and game consoles.

erosion simulation

Heightmaps can be exposed to various forms of erosion. These include river erosion by water, which forms channels and valleys, aeolian erosion, in which dust particles carried by the wind wear down rocks, and coastal erosion caused by the surf of seas. Various factors such as the duration of the erosion, the hardness of the rock and the density of the ablated material are taken into account in the simulation. In addition to the eroded heightmaps, masks can be generated for the subsequent texturing of the landscapes; they represent individual areas such as erosion channels, rock, debris, etc.
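The principle of erosion simulation and mask generation can be sketched with thermal erosion. This is a simpler technique than the water and wind erosion described above: material slides downhill wherever the slope exceeds a talus threshold, which acts as a stand-in for rock hardness. The sketch works on a 1D height profile, and the parameters are illustrative.

```python
def thermal_erosion(heights, talus=1.0, rate=0.5, iterations=50):
    """1D thermal erosion: material slides downhill where the height
    difference between neighbours exceeds `talus` (harder rock -> higher talus)."""
    h = list(heights)
    for _ in range(iterations):
        delta = [0.0] * len(h)
        for i in range(len(h) - 1):
            diff = h[i] - h[i + 1]          # positive when the left cell is higher
            excess = abs(diff) - talus
            if excess > 0:
                moved = rate * excess / 2
                high, low = (i, i + 1) if diff > 0 else (i + 1, i)
                delta[high] -= moved
                delta[low] += moved
        h = [a + d for a, d in zip(h, delta)]   # total material is conserved
    return h


def erosion_mask(before, after):
    """Mask of eroded areas (material removed), e.g. for texturing rock."""
    return [max(b - a, 0.0) for b, a in zip(before, after)]
```

A sharp peak flattens into a slope while the total amount of material stays the same. A matching deposition mask (`after - before`) would mark debris areas.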

staging

According to the storyboard, layouts and corporate standards, all objects and cameras are positioned and the perspectives are set for each scene. Virtual cameras offer settings comparable to those of real cameras (focal length, aperture, etc.). In addition, the sensor format can be set freely, and all camera settings can be animated. For animated camera flights, the camera positions are set along the time axis, similar to the animation of objects.
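The relation between focal length, sensor format and angle of view follows from the pinhole camera model. The sketch below is a standard formula, not a specific application's camera settings. It also shows why the same focal length frames a scene differently when the sensor format changes.

```python
import math


def field_of_view_deg(focal_length_mm, sensor_size_mm):
    """Angle of view across one sensor dimension (pinhole camera model)."""
    return math.degrees(2.0 * math.atan(sensor_size_mm / (2.0 * focal_length_mm)))
```

A 50 mm lens on a 36 mm wide full-frame sensor gives a horizontal angle of view of about 39.6°. On a narrower 23.6 mm sensor, the same focal length yields a tighter framing.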

mapping

To display materials on the surfaces of 3D models, flat projections are created in 2D space. A flat projection of a 3D model is called a UV map, where the letters U and V denote the two axes of the 2D space. For domed freeform surfaces, which can’t be displayed in 2D space without segmentation, a certain amount of distortion is accepted. This preserves the continuity of the surfaces in 2D space and ensures the seamless projection of materials. For 3D models with multiple material assignments, the UV maps can be edited per material. In addition to UV mapping, simplified projection methods that are independent of the shape of the object are available. The materials are then projected onto the model surfaces based on a planar, spherical, cubic or cylindrical shape.
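The simplified projection methods can be sketched as functions that compute UV coordinates from a point's position alone, regardless of the model's shape. The axis conventions (y as the cylinder axis, unscaled planar coordinates) are assumptions. Real tools also let the projection shape be moved, rotated and scaled.

```python
import math


def planar_uv(position):
    """Planar projection along z: use x and y directly."""
    x, y, _ = position
    return (x, y)


def cylindrical_uv(x, y, z, height):
    """Wrap around the y axis: u from the angle, v from the height."""
    u = (math.atan2(z, x) / (2.0 * math.pi)) % 1.0
    v = y / height
    return u, v


def box_uv(position, normal):
    """Cubic projection: project along the axis the normal points most towards."""
    x, y, z = position
    ax, ay, az = (abs(c) for c in normal)
    if ax >= ay and ax >= az:
        return (z, y)        # +X / -X faces
    if ay >= az:
        return (x, z)        # +Y / -Y faces
    return (x, y)            # +Z / -Z faces
```

The cubic projection picks a different plane per face orientation, which works well for technical parts where a single planar projection would smear the material across side faces.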

UI / programming

For 3D interactive and games, user actions and inputs can be assigned to specific functions within a project. Functions can be triggered by values, for example the position of a user in virtual space, or by any input device (touchscreen, mouse, keyboard, gamepad, VR / AR equipment, etc.). UI elements such as buttons, input fields and checkboxes allow settings to be adjusted and user dialogs to be processed. At regular intervals or on defined events, variables can be stored or retrieved locally or in the cloud via a database connection.
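Assigning inputs to functions can be sketched as a mapping layer. Device inputs are translated into named actions, and actions are bound to functions, so the same function can be triggered by a keyboard, a gamepad or the user's position. The class names, action names and input names below are hypothetical. Engines provide their own input systems for this.

```python
from collections import defaultdict


class InputMap:
    """Binds named actions to callbacks, independent of the input device."""

    def __init__(self):
        self._actions = defaultdict(list)   # action -> callbacks
        self._device_inputs = {}            # (device, input) -> action

    def bind_action(self, action, callback):
        self._actions[action].append(callback)

    def bind_input(self, device, input_name, action):
        self._device_inputs[(device, input_name)] = action

    def trigger(self, action, **data):
        for callback in self._actions[action]:
            callback(**data)

    def handle_input(self, device, input_name):
        action = self._device_inputs.get((device, input_name))
        if action is not None:              # unbound inputs are ignored
            self.trigger(action, device=device)


class TriggerZone:
    """Fires an action once whenever a tracked position enters a box."""

    def __init__(self, minimum, maximum, action, input_map):
        self.minimum, self.maximum = minimum, maximum
        self.action, self.input_map = action, input_map
        self._inside = False

    def update(self, position):
        inside = all(lo <= p <= hi for p, lo, hi in zip(position, self.minimum, self.maximum))
        if inside and not self._inside:     # only on entering, not while staying inside
            self.input_map.trigger(self.action, position=position)
        self._inside = inside
```

Binding a hypothetical `"open_configurator"` action to the keyboard key `c`, a gamepad button and a trigger zone in front of a product gives three ways to reach the same function, without the function needing to know which device was used.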